Consider two systems,  and

and  , which can interact by exchanging heat energy

and

doing work on one another. Let the system

, which can interact by exchanging heat energy

and

doing work on one another. Let the system  have energy

have energy  and adjustable external

parameters

and adjustable external

parameters

. Likewise, let the

system

. Likewise, let the

system  have energy

have energy  and adjustable

external parameters

and adjustable

external parameters

. The combined system

. The combined system

is

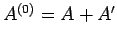

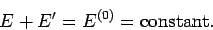

assumed to be isolated. It follows from the first law of thermodynamics that

is

assumed to be isolated. It follows from the first law of thermodynamics that

|

(198) |

Thus, the energy  of system

of system  is determined once the energy

is determined once the energy  of

system

of

system  is given, and vice versa. In fact,

is given, and vice versa. In fact,  could be regarded as

a function of

could be regarded as

a function of  . Furthermore, if the two systems can interact mechanically then,

in general, the parameters

. Furthermore, if the two systems can interact mechanically then,

in general, the parameters  are some function of the parameters

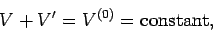

are some function of the parameters  . As

a simple example, if the two systems are separated by a movable partition in

an enclosure of fixed volume

. As

a simple example, if the two systems are separated by a movable partition in

an enclosure of fixed volume  , then

, then

|

(199) |

where  and

and  are the volumes of systems

are the volumes of systems  and

and  , respectively.

, respectively.

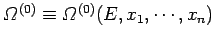

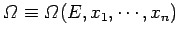

The total number of microstates accessible to  is clearly a function of

is clearly a function of

and the parameters

and the parameters  (where

(where  runs from 1 to

runs from 1 to  ), so

), so

.

We have already demonstrated (in

Sect. 5.2) that

.

We have already demonstrated (in

Sect. 5.2) that

exhibits a very pronounced maximum at one particular

value of the energy

exhibits a very pronounced maximum at one particular

value of the energy

when

when  is varied but the external parameters are held constant.

This behaviour comes about because of the very strong,

is varied but the external parameters are held constant.

This behaviour comes about because of the very strong,

|

(200) |

increase in the number of accessible microstates of  (or

(or  )

with energy. However, according to Sect. 3.8, the number of

accessible microstates exhibits a similar strong increase with

the volume, which is a typical external parameter, so that

)

with energy. However, according to Sect. 3.8, the number of

accessible microstates exhibits a similar strong increase with

the volume, which is a typical external parameter, so that

|

(201) |

It follows that the variation of $\Omega^{(0)}$ with a typical parameter $x_\alpha$, when all the other parameters and the energy are held constant, also exhibits a very sharp maximum at some particular value $x_\alpha = \tilde{x}_\alpha$. The equilibrium situation corresponds to the configuration of maximum probability, in which virtually all systems $A^{(0)}$ in the ensemble have values of $E$ and $x_\alpha$ very close to $\tilde{E}$ and $\tilde{x}_\alpha$. The mean values of these quantities are thus given by $\bar{E} = \tilde{E}$ and $\bar{x}_\alpha = \tilde{x}_\alpha$.
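
The sharpness of this maximum can be illustrated numerically. The sketch below is not part of the original notes: it assumes that the two systems have $f$ and $f'$ degrees of freedom, that their microstate counts scale as $E^{f}$ and $E'^{f'}$, and that the total count is the product of the two. The function names are hypothetical.

```python
import math

def ln_omega_total(E, E0, f, f_prime):
    """ln of the total microstate count, up to an additive constant.

    Assumes Omega ~ E**f for system A and Omega' ~ (E0 - E)**f_prime for A'.
    """
    return f * math.log(E) + f_prime * math.log(E0 - E)

def peak_energy(E0, f, f_prime):
    """Energy of A that maximizes ln_omega_total: solves f/E = f'/(E0 - E)."""
    return E0 * f / (f + f_prime)

def relative_width(f, f_prime):
    """Gaussian width of the peak divided by the peak energy.

    Follows from the second derivative of ln_omega_total at the peak,
    which equals -(f + f')**3 / (E0**2 * f * f').
    """
    return math.sqrt(f_prime / (f * (f + f_prime)))

if __name__ == "__main__":
    # For macroscopic systems (f of order 1e24) the peak is extremely narrow.
    print(peak_energy(1.0, 1e24, 1e24), relative_width(1e24, 1e24))
```

Under these assumptions the relative width falls off like the inverse square root of the number of degrees of freedom, which is why virtually every member of the ensemble sits at the peak.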

Consider a quasi-static process in which the system  is brought from an equilibrium

state described by

is brought from an equilibrium

state described by  and

and

to an infinitesimally different

equilibrium state described by

to an infinitesimally different

equilibrium state described by

and

and

. Let us calculate the resultant change in the

number of microstates accessible to

. Let us calculate the resultant change in the

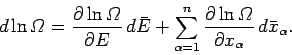

number of microstates accessible to  . Since

. Since

, the change in

, the change in

follows from standard mathematics:

follows from standard mathematics:

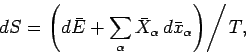

|

(202) |

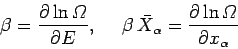

However, we have previously demonstrated that

|

(203) |

[from Eqs. (186) and (197)],

so Eq. (202) can be written

|

(204) |
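
Equation (204) can be checked by finite differences. The following minimal sketch is not taken from the original notes: it assumes a model microstate count of the monatomic ideal gas form, $\Omega \propto V^{N} E^{3N/2}$, with the volume as the only external parameter, so that the conjugate force is the mean pressure. All function names are hypothetical.

```python
import math

def ln_omega(E, V, N):
    """ln Omega for the assumed model Omega ~ V**N * E**(3N/2), constant dropped."""
    return N * math.log(V) + 1.5 * N * math.log(E)

def beta(E, V, N):
    """Temperature parameter: partial derivative of ln Omega with respect to E."""
    return 1.5 * N / E

def mean_pressure(E, V, N):
    """Conjugate force for the volume: (1/beta) * d(ln Omega)/dV."""
    return (N / V) / beta(E, V, N)

def compare(E, V, N, dE, dV):
    """Return (actual change in ln Omega, prediction of Eq. (204))."""
    actual = ln_omega(E + dE, V + dV, N) - ln_omega(E, V, N)
    predicted = beta(E, V, N) * (dE + mean_pressure(E, V, N) * dV)
    return actual, predicted

if __name__ == "__main__":
    # Small, arbitrary displacements; the two numbers agree to second order.
    print(compare(2.0, 3.0, 10, 1e-6, -2e-6))
```

The two returned values differ only by terms that are second order in the displacements, as expected for a first-order differential relation.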

Note that the temperature parameter  and the mean conjugate forces

and the mean conjugate forces

are only well-defined for equilibrium states. This is

why we are only considering quasi-static changes

in which the two systems are always

arbitrarily close to equilibrium.

are only well-defined for equilibrium states. This is

why we are only considering quasi-static changes

in which the two systems are always

arbitrarily close to equilibrium.

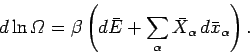

Let us rewrite Eq. (204)

in terms of the thermodynamic temperature  ,

using the relation

,

using the relation

. We obtain

. We obtain

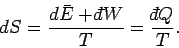

|

(205) |

where

|

(206) |
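
As a worked illustration, which is not part of the original derivation, suppose the system is a monatomic ideal gas of $N$ molecules, so that $\Omega \propto V^{N} E^{3N/2}$, and take the volume as the only external parameter, with the mean pressure $\bar{p}$ as its conjugate force. Under these assumptions, Eqs. (205) and (206) reproduce the familiar ideal gas results:

```latex
\begin{align}
S &= k\,\ln\Omega = N k \ln V + \tfrac{3}{2}\,N k \ln \bar{E} + \text{constant}, \\
dS &= \frac{3 N k}{2\,\bar{E}}\,d\bar{E} + \frac{N k}{V}\,dV
    = \frac{1}{T}\,d\bar{E} + \frac{\bar{p}}{T}\,dV, \\
\frac{1}{T} &= \frac{3 N k}{2\,\bar{E}}
    \quad\Longrightarrow\quad \bar{E} = \tfrac{3}{2}\,N k\,T, \\
\frac{\bar{p}}{T} &= \frac{N k}{V}
    \quad\Longrightarrow\quad \bar{p}\,V = N k\,T.
\end{align}
```

The second line compares the total differential of the assumed $S$ with Eq. (205) term by term, and the last two lines follow from matching the coefficients of $d\bar{E}$ and $dV$.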

Equation (205) is a differential relation which enables us to calculate

the quantity

as a function of the mean energy

as a function of the mean energy  and the mean external parameters

and the mean external parameters

, assuming that we can calculate the temperature

, assuming that we can calculate the temperature  and mean

conjugate forces

and mean

conjugate forces

for each equilibrium state. The function

for each equilibrium state. The function

is termed the entropy of system

is termed the entropy of system  . The word

entropy is derived from the Greek en+trepien, which means ``in change.''

The reason for this etymology

will become apparent presently. It can be seen from Eq. (206)

that the entropy is merely a parameterization

of the number of accessible microstates.

Hence, according to statistical mechanics,

. The word

entropy is derived from the Greek en+trepien, which means ``in change.''

The reason for this etymology

will become apparent presently. It can be seen from Eq. (206)

that the entropy is merely a parameterization

of the number of accessible microstates.

Hence, according to statistical mechanics,

is essentially

a measure of the relative probability

of a state characterized by values of the mean energy and mean external parameters

is essentially

a measure of the relative probability

of a state characterized by values of the mean energy and mean external parameters

and

and

, respectively.

, respectively.

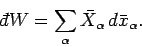
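
To give a feel for the phrase "relative probability," Eq. (206) can be inverted to give $\Omega = \exp(S/k)$, so an entropy difference fixes a ratio of microstate counts. The sketch below is an illustration only: the function name is hypothetical, and it assumes that the probability of a macrostate is proportional to its number of accessible microstates.

```python
import math

BOLTZMANN_K = 1.380649e-23  # Boltzmann constant in J/K (exact SI value)

def log10_microstate_ratio(delta_S):
    """Return log10(Omega_2 / Omega_1) for delta_S = S_2 - S_1 in J/K.

    Uses S = k ln(Omega), so Omega_2 / Omega_1 = exp(delta_S / k).
    The base-10 logarithm is returned because the ratio itself
    overflows floating point for any macroscopic entropy difference.
    """
    return delta_S / (BOLTZMANN_K * math.log(10.0))

if __name__ == "__main__":
    # Hypothetical example: an entropy difference of one nanojoule per kelvin.
    print(log10_microstate_ratio(1.0e-9))
```

Even for this tiny entropy difference the exponent is of order $10^{13}$, so the higher-entropy state is overwhelmingly more probable.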

According to Eq. (129), the net amount of work performed during a quasi-static

change is given by

|

(207) |

It follows from Eq. (205) that

|

(208) |

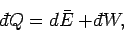

Thus, the thermodynamic temperature  is the integrating factor for the

first law of thermodynamics,

is the integrating factor for the

first law of thermodynamics,

|

(209) |

which converts the inexact differential

into the exact

differential

into the exact

differential  (see Sect. 4.5).

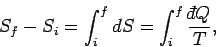

It follows that the entropy difference between any two macrostates

(see Sect. 4.5).

It follows that the entropy difference between any two macrostates

and

and  can be written

can be written

|

(210) |

where the integral is evaluated for any process through which the system is brought quasi-statically via a sequence of near-equilibrium configurations from its initial to its final macrostate. The process has to be quasi-static because the temperature $T$, which appears in the integrand, is only well-defined for an equilibrium state. Since the left-hand side of the above equation only depends on the initial and final states, it follows that the integral on the right-hand side is independent of the particular sequence of quasi-static changes used to get from $i$ to $f$. Thus, $\int_i^f \text{đ}Q/T$ is independent of the process (provided that it is quasi-static).
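
This path independence can be illustrated numerically. The sketch below is not from the original notes: it assumes a monatomic ideal gas with $\bar{E} = \tfrac{3}{2} N k T$ and $\bar{p} = N k T / V$, works in arbitrary units with $N k = 1$, and integrates along two different hypothetical quasi-static paths between the same end states.

```python
NK = 1.0  # N*k in arbitrary units (an assumption of this sketch)

def integrate_leg(T0, V0, T1, V1, steps=10000):
    """Midpoint-rule integrals of dQ and dQ/T along a straight line in the (T, V) plane."""
    dT = (T1 - T0) / steps
    dV = (V1 - V0) / steps
    heat = 0.0
    entropy = 0.0
    for i in range(steps):
        T = T0 + (i + 0.5) * dT
        V = V0 + (i + 0.5) * dV
        # First law for the assumed ideal gas: dQ = dE + p dV.
        dQ = 1.5 * NK * dT + (NK * T / V) * dV
        heat += dQ
        entropy += dQ / T
    return heat, entropy

def integrate_path(points, steps=10000):
    """Sum the leg integrals over a piecewise-linear path given as (T, V) points."""
    heat = 0.0
    entropy = 0.0
    for (T0, V0), (T1, V1) in zip(points, points[1:]):
        q, s = integrate_leg(T0, V0, T1, V1, steps)
        heat += q
        entropy += s
    return heat, entropy

Ti, Vi, Tf, Vf = 300.0, 1.0, 600.0, 2.0
path_a = [(Ti, Vi), (Ti, Vf), (Tf, Vf)]  # expand at constant T, then heat at constant V
path_b = [(Ti, Vi), (Tf, Vi), (Tf, Vf)]  # heat at constant V, then expand at constant T
Qa, Sa = integrate_path(path_a)
Qb, Sb = integrate_path(path_b)

if __name__ == "__main__":
    print("heat absorbed:", Qa, Qb)
    print("entropy change:", Sa, Sb)
```

The heat absorbed differs between the two paths, whereas the integral of $\text{đ}Q/T$ comes out the same for both (to within the accuracy of the numerical integration), which is what one expects if $dS$ is exact and $\text{đ}Q$ is not.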

All of the concepts which we have encountered up to now in this course, such as temperature, heat, energy, volume, pressure, etc., have been fairly familiar to us from other branches of physics. However, entropy, which turns out to be of crucial importance in thermodynamics, is something quite new. Let us consider the following questions. What does the entropy of a system actually signify? What use is the concept of entropy?

Richard Fitzpatrick

2006-02-02
