Matrix eigenvalue theory

Suppose that  is

a real symmetric square matrix of dimension

is

a real symmetric square matrix of dimension  . If follows that

. If follows that

and

and

, where

, where  denotes a complex conjugate, and

denotes a complex conjugate, and  denotes a transpose.

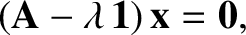

Consider the matrix equation

denotes a transpose.

Consider the matrix equation

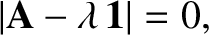

|

(A.144) |

Any column vector  that satisfies this equation is called

an eigenvector of

that satisfies this equation is called

an eigenvector of  . Likewise, the associated number

. Likewise, the associated number  is called an eigenvalue of

is called an eigenvalue of  (Gradshteyn and Ryzhik 1980c). Let us investigate the properties of the eigenvectors and eigenvalues of a real

symmetric matrix.

(Gradshteyn and Ryzhik 1980c). Let us investigate the properties of the eigenvectors and eigenvalues of a real

symmetric matrix.
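Definition (A.144) translates directly into a numerical test. The Python sketch below checks whether a candidate pair $(\lambda, \mathbf{x})$ satisfies $\mathbf{A}\,\mathbf{x} = \lambda\,\mathbf{x}$ component by component. It rejects the zero vector, which satisfies the equation trivially for every $\lambda$. The helper names, the tolerance, and the test matrix are hypothetical choices made for illustration.

```python
def mat_vec(A, x):
    """Return the product A x for a square matrix A stored as a list of rows."""
    return [sum(a_kl * x_l for a_kl, x_l in zip(row, x)) for row in A]

def is_eigenpair(A, x, lam, tol=1e-12):
    """Check whether A x = lam x holds in every component, within tol.

    The zero vector is rejected, since it satisfies the equation for any lam.
    """
    if all(abs(x_k) <= tol for x_k in x):
        return False
    Ax = mat_vec(A, x)
    return all(abs(Ax_k - lam * x_k) <= tol for Ax_k, x_k in zip(Ax, x))

# Hypothetical real symmetric test matrix.
A = [[2.0, 1.0],
     [1.0, 2.0]]
print(is_eigenpair(A, [1.0, 1.0], 3.0))    # A x = (3, 3) = 3 x
print(is_eigenpair(A, [1.0, -1.0], 1.0))   # A x = (1, -1) = 1 x
print(is_eigenpair(A, [1.0, 0.0], 2.0))    # A x = (2, 1), not a multiple of x
```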

Equation (A.144) can be rearranged to give

|

(A.145) |

where  is the unit matrix. The preceding matrix equation is essentially a set of

is the unit matrix. The preceding matrix equation is essentially a set of  homogeneous

simultaneous algebraic equations for the

homogeneous

simultaneous algebraic equations for the  components of

components of  .

A well-known property of such a set of equations is that it only has a nontrivial

solution when the determinant of the associated matrix is set to zero (Gradshteyn and Ryzhik 1980c).

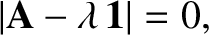

Hence, a necessary condition for the preceding set of equations to have a nontrivial

solution is that

.

A well-known property of such a set of equations is that it only has a nontrivial

solution when the determinant of the associated matrix is set to zero (Gradshteyn and Ryzhik 1980c).

Hence, a necessary condition for the preceding set of equations to have a nontrivial

solution is that

|

(A.146) |

where  denotes a determinant.

This formula is essentially an

denotes a determinant.

This formula is essentially an  th-order polynomial equation

for

th-order polynomial equation

for  . We know that such an equation has

. We know that such an equation has  (possibly complex)

roots. Hence, we conclude that there are

(possibly complex)

roots. Hence, we conclude that there are  eigenvalues, and

eigenvalues, and  associated eigenvectors, of the

associated eigenvectors, of the  -dimensional matrix

-dimensional matrix  .

.

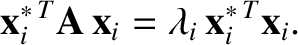
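For $n = 2$ the characteristic equation (A.146) can be solved in closed form, which gives a concrete preview of the reality result established below. Write a general real symmetric $2\times 2$ matrix as $\mathbf{A} = \begin{pmatrix} a & b \\ b & c \end{pmatrix}$; the labels $a$, $b$, $c$ are introduced here for illustration only. Then

$$
\begin{aligned}
|\mathbf{A} - \lambda\,\mathbf{1}| &= (a - \lambda)(c - \lambda) - b^{2} = \lambda^{2} - (a + c)\,\lambda + (a c - b^{2}) = 0,\\
\lambda_{\pm} &= \frac{a + c}{2} \pm \sqrt{\left(\frac{a + c}{2}\right)^{2} - (a c - b^{2})} = \frac{a + c}{2} \pm \sqrt{\left(\frac{a - c}{2}\right)^{2} + b^{2}}.
\end{aligned}
$$

The quantity under the square root is a sum of squares, so both roots are real. They coincide only when $a = c$ and $b = 0$, that is, when $\mathbf{A}$ is already a multiple of the unit matrix.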

Let us now demonstrate that the  eigenvalues and eigenvectors of the real symmetric matrix

eigenvalues and eigenvectors of the real symmetric matrix

are all real. We have

are all real. We have

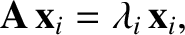

|

(A.147) |

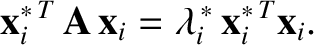

and, taking the transpose and complex conjugate,

|

(A.148) |

where  and

and  are the

are the  th eigenvector and eigenvalue

of

th eigenvector and eigenvalue

of  , respectively. Left multiplying Equation (A.147) by

, respectively. Left multiplying Equation (A.147) by

, we obtain

, we obtain

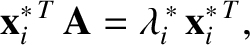

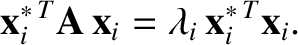

|

(A.149) |

Likewise, right multiplying Equation (A.148) by  , we get

, we get

|

(A.150) |

The difference of the previous two equations yields

|

(A.151) |

It follows that

, because

, because

(which is

(which is

in vector notation) is real and positive definite. Hence,

in vector notation) is real and positive definite. Hence,  is real.

It immediately follows that

is real.

It immediately follows that  is real.

is real.
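The step from (A.149) and (A.150) to (A.151) works because, for real symmetric $\mathbf{A}$, the scalar $\mathbf{x}^{*T}\mathbf{A}\,\mathbf{x}$ equals its own complex conjugate for every complex vector $\mathbf{x}$, not only for eigenvectors. The Python sketch below evaluates this quantity for an arbitrary complex vector, once with a symmetric matrix and once with a copy whose symmetry has been broken, so the role of the symmetry is visible. The helper name and all test data are hypothetical.

```python
def hermitian_form(A, x):
    """Return the scalar x^{*T} A x for a real square matrix A (list of rows)
    and a complex vector x (list of complex numbers)."""
    n = len(x)
    return sum(x[k].conjugate() * A[k][l] * x[l]
               for k in range(n) for l in range(n))

# Hypothetical test data: a real symmetric matrix, and a copy with one
# off-diagonal entry changed so that it is no longer symmetric.
A_sym = [[4.0, 1.0, -2.0],
         [1.0, 3.0, 0.5],
         [-2.0, 0.5, 1.0]]
A_nonsym = [[4.0, 1.0, -2.0],
            [0.0, 3.0, 0.5],
            [-2.0, 0.5, 1.0]]
x = [1.0 + 2.0j, -0.5 + 1.0j, 3.0 - 1.0j]   # an arbitrary complex vector

q_sym = hermitian_form(A_sym, x)
q_nonsym = hermitian_form(A_nonsym, x)
norm_sq = sum((v.conjugate() * v).real for v in x)   # x^{*T} x, real and positive

print("symmetric:     x*T A x =", q_sym)      # imaginary part vanishes
print("non-symmetric: x*T A x =", q_nonsym)   # imaginary part survives
print("x*T x =", norm_sq)
```

For the symmetric matrix the imaginary contributions of the $(k, l)$ and $(l, k)$ terms cancel in pairs, which is exactly why the left-hand sides of (A.149) and (A.150) must agree.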

Next, let us show that two eigenvectors corresponding to two different eigenvalues are mutually orthogonal. Let

where

. Taking the transpose of the first equation and right multiplying by

. Taking the transpose of the first equation and right multiplying by  , and left multiplying the second

equation by

, and left multiplying the second

equation by

, we obtain

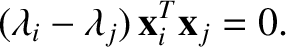

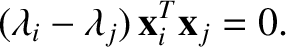

Taking the difference of the preceding two equations, we get

, we obtain

Taking the difference of the preceding two equations, we get

|

(A.156) |

Because, by hypothesis,

, it follows

that

, it follows

that

. In vector notation, this is the same

as

. In vector notation, this is the same

as

. Hence, the eigenvectors

. Hence, the eigenvectors  and

and

are mutually orthogonal.

are mutually orthogonal.
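For the $2\times 2$ case the orthogonality can be checked directly. The sketch below builds both eigenpairs of $\begin{pmatrix} a & b \\ b & c \end{pmatrix}$ from the closed-form roots of the characteristic equation. It takes $(b,\ \lambda - a)^{T}$ as the eigenvector, which satisfies the first row of $(\mathbf{A} - \lambda\,\mathbf{1})\,\mathbf{x} = \mathbf{0}$ by construction; the second row then holds because $\lambda$ is a root. The sketch assumes $b \neq 0$ (when $b = 0$ the matrix is already diagonal). The function name and the example entries are hypothetical.

```python
import math

def symmetric_2x2_eigenpairs(a, b, c):
    """Return [(lam_plus, x_plus), (lam_minus, x_minus)] for [[a, b], [b, c]].

    Assumes b != 0. Each x = [b, lam - a] solves the first row of
    (A - lam*1) x = 0; the second row holds because lam is a root of
    the characteristic polynomial.
    """
    if b == 0:
        raise ValueError("b must be nonzero; the matrix is already diagonal")
    mean = 0.5 * (a + c)
    radius = math.hypot(0.5 * (a - c), b)   # sqrt(((a - c)/2)**2 + b**2), always real
    return [(lam, [b, lam - a]) for lam in (mean + radius, mean - radius)]

def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

a, b, c = 2.0, 1.0, -1.0                     # hypothetical example entries
A = [[a, b], [b, c]]
(lam1, x1), (lam2, x2) = symmetric_2x2_eigenpairs(a, b, c)
for lam, x in ((lam1, x1), (lam2, x2)):
    residual = [dot(row, x) - lam * x_k for row, x_k in zip(A, x)]
    print(f"lambda = {lam:+.6f}, residual = {residual}")
print("x1 . x2 =", dot(x1, x2))              # zero up to rounding error
```

Algebraically, $\mathbf{x}_{+}\cdot\mathbf{x}_{-} = b^{2} + \lambda_{+}\lambda_{-} - a(\lambda_{+} + \lambda_{-}) + a^{2} = b^{2} + (ac - b^{2}) - a(a + c) + a^{2} = 0$, using the sum and product of the roots.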

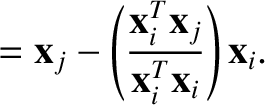

Suppose that

. In this case, we cannot conclude

that

. In this case, we cannot conclude

that

by the preceding argument. However, it is easily seen that any

linear combination of

by the preceding argument. However, it is easily seen that any

linear combination of  and

and  is an eigenvector

of

is an eigenvector

of  with eigenvalue

with eigenvalue  . Hence, it is possible

to define two new eigenvectors of

. Hence, it is possible

to define two new eigenvectors of  , with the eigenvalue

, with the eigenvalue

, that are mutually orthogonal. For instance,

, that are mutually orthogonal. For instance,

It should be clear that this argument can be generalized to deal with any

number of eigenvalues that take the same value.
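The construction for a repeated eigenvalue is one step of Gram-Schmidt orthogonalization: subtract from $\mathbf{x}_j$ its projection $(\mathbf{x}_i^{T}\mathbf{x}_j/\mathbf{x}_i^{T}\mathbf{x}_i)\,\mathbf{x}_i$ onto $\mathbf{x}_i$. The Python sketch below applies that step repeatedly, which is one way to handle any number of degenerate eigenvectors. It uses the modified Gram-Schmidt ordering, in which each projection is taken from the partially updated vector. The function name, the tolerance used to drop linearly dependent vectors, and the example matrix (whose eigenvalue 2 is doubly degenerate) are hypothetical.

```python
def dot(u, v):
    return sum(p * q for p, q in zip(u, v))

def gram_schmidt(vectors, tol=1e-12):
    """Orthogonalize real vectors in the order given (modified Gram-Schmidt).

    For each earlier output vector e, replace y by y - (e^T y / e^T e) e.
    A vector whose squared length falls below tol is treated as linearly
    dependent on the earlier ones and dropped.
    """
    basis = []
    for x in vectors:
        y = list(x)
        for e in basis:
            coeff = dot(e, y) / dot(e, e)
            y = [y_k - coeff * e_k for y_k, e_k in zip(y, e)]
        if dot(y, y) > tol:
            basis.append(y)
    return basis

# Hypothetical example: A has eigenvalues 2, 2, 5, and x_i, x_j are two
# non-orthogonal eigenvectors belonging to the repeated eigenvalue 2.
A = [[3.0, 1.0, 1.0],
     [1.0, 3.0, 1.0],
     [1.0, 1.0, 3.0]]
x_i = [1.0, -1.0, 0.0]
x_j = [1.0, 0.0, -1.0]

xi_new, xj_new = gram_schmidt([x_i, x_j])
print("x_i' =", xi_new, " x_j' =", xj_new)
print("x_i'^T x_j' =", dot(xi_new, xj_new))
print("A x_j' =", [dot(row, xj_new) for row in A])   # equals 2 * x_j'
```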

In conclusion, a real symmetric $n$-dimensional matrix possesses $n$ real eigenvalues, with $n$ associated real eigenvectors, that are, or can be chosen to be, mutually orthogonal.
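The conclusion can also be seen constructively. The Python sketch below uses the cyclic Jacobi method, a standard technique for real symmetric matrices that this section does not itself describe. Repeated real plane rotations $\mathbf{J}$, each chosen to zero one off-diagonal pair, transform $\mathbf{A}$ into $\mathbf{J}^{T}\mathbf{A}\,\mathbf{J}$ until it is numerically diagonal. Because every rotation is real and orthogonal, the accumulated product of rotations has real, mutually orthogonal unit columns, and these are eigenvectors of $\mathbf{A}$. The function name, tolerance, sweep limit, and example matrix are our own choices; this is an illustration, not a production solver.

```python
import math

def jacobi_eigen(A, tol=1e-12, max_sweeps=100):
    """Diagonalize a real symmetric matrix A (list of rows) by cyclic Jacobi rotations.

    Returns (eigenvalues, V): column k of V is a unit eigenvector for
    eigenvalues[k]. Only real orthogonal rotations are applied, so the
    results are real and the columns of V are mutually orthogonal.
    """
    n = len(A)
    a = [[float(v) for v in row] for row in A]                # working copy
    V = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    for _ in range(max_sweeps):
        off = math.sqrt(sum(a[p][q] ** 2
                            for p in range(n) for q in range(n) if p != q))
        if off < tol:                                          # numerically diagonal
            break
        for p in range(n - 1):
            for q in range(p + 1, n):
                if a[p][q] == 0.0:
                    continue
                # t = tan(angle) is the smaller root of t**2 + 2*theta*t - 1 = 0,
                # which makes the rotated a[p][q] vanish.
                theta = (a[q][q] - a[p][p]) / (2.0 * a[p][q])
                t = math.copysign(1.0, theta) / (abs(theta) + math.hypot(theta, 1.0))
                c = 1.0 / math.hypot(t, 1.0)
                s = t * c
                # a <- a J (columns p and q) ...
                for k in range(n):
                    akp, akq = a[k][p], a[k][q]
                    a[k][p] = c * akp - s * akq
                    a[k][q] = s * akp + c * akq
                # ... then a <- J^T a (rows p and q).
                for k in range(n):
                    apk, aqk = a[p][k], a[q][k]
                    a[p][k] = c * apk - s * aqk
                    a[q][k] = s * apk + c * aqk
                # Accumulate the eigenvector matrix: V <- V J.
                for k in range(n):
                    vkp, vkq = V[k][p], V[k][q]
                    V[k][p] = c * vkp - s * vkq
                    V[k][q] = s * vkp + c * vkq
    return [a[i][i] for i in range(n)], V

# Hypothetical example with a doubly degenerate eigenvalue (eigenvalues 2, 2, 5).
A = [[3.0, 1.0, 1.0],
     [1.0, 3.0, 1.0],
     [1.0, 1.0, 3.0]]
eigenvalues, V = jacobi_eigen(A)
print("eigenvalues:", sorted(eigenvalues))
for k, lam in enumerate(eigenvalues):
    x = [V[i][k] for i in range(3)]
    residual = max(abs(sum(A[i][j] * x[j] for j in range(3)) - lam * x[i])
                   for i in range(3))
    print(f"lambda = {lam:.12f}, max |A x - lambda x| = {residual:.1e}")
```

Even for the repeated eigenvalue, the method returns an orthonormal pair of eigenvectors, consistent with the "can be chosen to be" clause of the conclusion.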
